Building Data-Driven Growth in Retail: 8 Strategic Lessons for Senior Leaders 4 Dec 1:50 AM

How to overcome the modern challenges of growth using a data-centric approach to digital transformation.

Thank you to everyone who joined our recent webinar on data-driven growth in retail!

We shared a behind-the-scenes look at one of our longest-standing client partnerships – spanning analytics, digital strategy, and deep transformation programmes for over a decade. The conversation that followed was highly relevant to anyone navigating data-led change today.

While the event was a one-off, we are pleased to share a summary of 8 themes that stood out as especially resonant for senior leaders and anyone responsible for building modern, data-centric organisations.

In this summary, we’ll discuss the practical lessons and cultural shifts required to navigate the ever-changing role of data in building (and sustaining) commercial growth in retail.

1. Digital transformation requires near-term proof but is ultimately a long game.

One of the strongest threads throughout the conversation was that data-led transformation takes time, especially inside complex, established businesses.

However – while the full transformation may span years – traction should be visible quickly. Early wins – even small ones – are essential to building belief, unlocking further investment, and shifting organisational habits.

This dual horizon is where senior leaders create the most value:

- Short-term: show meaningful impact quickly.

- Long-term: stay focused on the organisational capability you’re building.

Balancing both is difficult, but essential. Digital transformation doesn’t happen overnight. Organisations evolve over years, not quarters, and the change requires patience and continual adjustment.

2. Culture, not technology, will determine your pace and success.

The discussion returned to a core truth: technology can be the easy part – tools are plentiful, platforms mature quickly, and vendors are always evolving their offer.

However, cultural attributes (decision-making behaviour, trust in data, cross-functional alignment, willingness to test) can be both the bottleneck and the multiplier.

Data-driven organisations share certain cultural characteristics:

- A shift from gut feel to evidence

- Comfort with experimentation and iteration

- Openness to cross-functional collaboration

- Removal of politics from performance discussions

- Leaders modelling curiosity rather than absolute certainty

Digital transformation succeeds when culture is actively designed around these characteristics, not passively inherited. Creating momentum often begins with small, visible wins that demonstrate the value of data in everyday decisions.

3. Analytics must begin with clearly defined commercial questions and real business problems.

Senior leaders often face pressure to adopt ‘the latest thing’ – new platforms, new channels, new capabilities, new buzzwords. But our discussion emphasised that analytics truly makes an impact when it supports real commercial challenges or questions.

Companies making the most progress start by asking:

- What decisions are we trying to improve?

- Which levers create return?

- Where is the business carrying risk, assumption or inefficiency?

Once the real business questions are identified, data becomes an accelerator.

For leaders, this means resisting tool-led thinking and holding teams to outcome-led conversations.

4. Experimentation and test-and-learn are still essential, not optional.

The highest-performing digital and retail teams have been operating through continuous test-and-learn to drive growth for some years now – embedding experimentation into marketing, product, UX, e-commerce and even operational workflows.

The discussion highlighted the importance of:

- Clear hypotheses

- Strong measurement frameworks

- Willingness to accept and learn from failed tests

- Developing processes that make experimentation repeatable

For senior leaders, championing experimentation isn’t just an ongoing tactic; it’s a deeply embedded organisational philosophy that removes doubt, speeds up decisions, and centralises learning.

5. Organisational alignment unlocks compounding advantage.

No team can become data-driven alone. Analytics teams need marketers and product owners to adopt new behaviours. Marketers need reliable data foundations. Product teams need clarity on commercial priorities. Commercial teams need actionable insight.

Where organisations succeed, we see:

- Shared language around data

- Clear ownership of metrics

- Education embedded into the business

- “Evangelists” who influence their functions from within

- Leaders modelling desired behaviours

The discussion reinforced that alignment is not a by-product – it’s a deliverable. When alignment increases, the impact of every team multiplies and the organisation moves forward together.

6. The right partnerships help build manageable growth.

At the risk of sounding biased: the value of external partners was another important thread throughout the discussion – not just as suppliers, but as accelerators of transformation.

External expertise can help organisations:

- Move quickly when internal teams are small

- Reduce risk when making foundational technology decisions

- Access skills not yet mature internally

- Navigate legacy complexity

- Bring best-practice thinking from adjacent sectors

Successful digital transformations typically blend external capability early and internal capability growth later, ensuring knowledge transfer and long-term sustainability.

7. AI represents a huge opportunity but must be thoroughly governed and purpose-led.

The conversation acknowledged AI’s rapidly expanding role in retail and digital. But rather than hype, the dominant sentiment was intentional adoption.

For senior leaders, the imperatives are clear:

- Define what AI means in your organisation

- Put guardrails and governance in place

- Link AI initiatives directly to business outcomes

- Prioritise safety, compliance, and trust

- Use your own data or ‘custom AI’ to drive differentiated value

For many organisations, the impact of AI is already being felt across operations, customer experience, search, content, and more. For leaders, developing a clear sense of direction is essential in embracing this change responsibly.

8. Your data strategy should help you answer the difficult commercial questions.

Finally, the session reinforced that data is not a reporting function – it’s a strategic capability that helps answer the sticky questions leaders may struggle with most:

- Where should we invest and at what rate?

- What type of growth is realistically achievable?

- Where is profitability being eroded?

- Which levers matter most for performance?

When designed carefully, your vision for data (or your data strategy) becomes a mechanism for better trade-offs, clearer prioritisation, and more confident leadership. Strong measurement frameworks and clear financial thinking help ensure that data isn’t just a box-ticking exercise or something you respond to reactively, but is ‘always-on’, relevant, and aligned to support your decisions.

A final thank you

Thank you once again to everyone who joined us. The quality of questions and openness of the discussion highlighted just how complex data-driven growth has become for modern organisations. Many of the principles extend well beyond retail and reflect a foundational way of thinking that leaders must adopt to keep seeing impact.

If you’d like to discuss any of these themes further or explore any of the data and analytics challenges your organisation may be facing, feel free to get in touch and speak to a friendly expert from our team.

We look forward to continuing the dialogue at future events, and helping to support your next chapter of digital transformation and data-driven growth.

The post Building Data-Driven Growth in Retail: 8 Strategic Lessons for Senior Leaders appeared first on Lynchpin.

Unfiltered: Adobe Analytics – Key takeaways from session #2 13 Nov 2:00 AM

Tips to strengthen engagement, ideation, and foster a culture of experimentation using Adobe Analytics, Adobe Target, and more.

Our recent Unfiltered: Adobe Analytics session focused on how organisations can enhance experimentation practices and ideation using Adobe Target and Adobe Analytics, alongside complementary platforms such as FullStory and Mixpanel.

Participants explored practical approaches to hypothesis development, A/B testing and driving user engagement, and shared tips for creating a successful culture of continuous learning and experimentation.

Below is a quick summary of 8 key takeaways from the discussion which you can begin applying to your own analytics practice today.

8 ways to strengthen tool engagement and experimentation culture in your organisation:

1. Align objectives and KPIs: Ensure personal objectives are aligned with business objectives and that their impact is codified. We noted that establishing KPIs that link clearly to business goals and experimentation outcomes helps set clear expectations for success.

2. Establish a semantic layer: Agree consistent definitions for all key metrics. Use a semantic layer to create an agreed-upon single source of truth (e.g. exactly what constitutes your “monthly active users”), so that definitions don’t become a subject of debate later.

3. Define success upfront: Avoid ambiguity by codifying what success looks like in commercial terms, and how it will be measured, before the test results come in. This prevents confusion and subjective debate afterwards.

4. Adopt a unified customer journey framework: Align metrics and priorities around a shared understanding of the full customer lifecycle. Agree on your organisation’s unified customer journey and understand the upper- and mid-funnel experience and the major points of opportunity beyond final conversion. This helps you see the holistic picture, highlight problems (e.g. acquisition cost), and spot negative knock-on effects: for example, a successful test that reduces bounce rate at the top of the funnel could push more low-quality traffic further down the funnel or elsewhere, affecting measurement more broadly across products or departments.

5. Don’t trust averages: Instead, ask “what’s that average hiding?” Check for differences in performance across segments (e.g. device type: desktop vs. mobile) or cohorts to find genuine learnings and uncover hidden trends, rather than baking generalised, face-value insights into onward decision-making.

6. Embrace and communicate “failures”: This tip comes up often because it’s difficult to do. Unexpected or negative results often represent savings when reframed in commercial terms (e.g. ‘We saved X by not doing Y’), and they always provide valuable learnings for your team. Communicating these failures or unexpected results fosters a culture where it’s okay to fall, get up, and pivot more quickly to the truth or your next best set of actions.

7. Create champions and super users: Take the time to identify, empower, and support enthusiastic advocates or ‘super users’ across teams. These users multiply your ability to reach and influence the business, and accelerate adoption and enablement.

- Provide shared spaces to communicate and celebrate success: Use Slack/Teams channels, in-person or digital meetings where super users can discuss ideas, share knowledge, and celebrate incremental progress and bigger successes.

- Continuous education and reinforcement: Provide ongoing training and refreshers to sustain engagement and skill development. Continuously educate and reaffirm the process, resources, definitions, and guidance – remember these are all sustained efforts, not one-time events.

8. Gamify participation: Use friendly competition and success stories to boost enthusiasm and collaboration. Develop and share narratives that spotlight progress and foster light-hearted competition across teams or departments, e.g. a quick message: ‘of the 20 ideas we had, we validated 4 and are currently seeing a 5% decrease in customer support requests’.
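Tip 3 can be made concrete by recording the success criteria as data before a test runs, then judging the result only against them. The following is a minimal sketch, assuming a conversion-rate metric and a pooled two-proportion z-test; the `SuccessCriteria` structure, thresholds and sample figures are hypothetical, and your testing platform may calculate significance differently.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Hypothetical record of what 'success' means, agreed before the test starts."""
    metric: str
    min_relative_uplift: float  # smallest uplift worth acting on commercially
    alpha: float                # significance threshold agreed upfront


def two_proportion_test(conv_a, n_a, conv_b, n_b):
    """Relative uplift of B over A, plus a two-sided p-value from a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return (p_b - p_a) / p_a, p_value


def evaluate(criteria, conv_a, n_a, conv_b, n_b):
    """Judge the result only against the criteria recorded in advance."""
    uplift, p_value = two_proportion_test(conv_a, n_a, conv_b, n_b)
    success = p_value < criteria.alpha and uplift >= criteria.min_relative_uplift
    return {"metric": criteria.metric, "uplift": uplift, "p_value": p_value, "success": success}


criteria = SuccessCriteria(metric="checkout_conversion", min_relative_uplift=0.02, alpha=0.05)
result = evaluate(criteria, conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
print(result["success"])  # False: the uplift is large enough, but not significant at the agreed alpha
```

In this example the observed 12% relative uplift clears the commercial threshold but not the agreed significance level, so the test is not called a success; with twice the traffic at the same conversion rates, it would be. Because the criteria are fixed beforehand, neither outcome is open to after-the-fact reinterpretation.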

Concluding insights:

- Building maturity with Adobe Target and Analytics requires not just technical proficiency but also organisational alignment and cultural buy-in.

- Testing should be a collaborative, inclusive and celebrated effort, not siloed or fear-driven.

- ‘Super users’ and champions play a vital role in driving sustained engagement, bridging gaps between technical teams and the wider business.

- Ultimately, success depends on clear processes, shared definitions, transparency, and a culture of curiosity.

Continuing the conversation:

We welcome you to join us at our next user group event to connect with peers, share your challenges, and discover ways to drive analytics forward by addressing common Adobe Analytics pain points.

Secure your spot at upcoming events

Need personalised support?

Our team at Lynchpin turns scattered data into clear insight so leaders can act fast. Independent since 2005, our experts are here to help with all of your data and analytics challenges.

We have deep vendor-neutral skills across the full analytics stack – supporting leading organisations such as Channel 5, Canon, Hotel Chocolat, John Lewis Finance, AbbVie, and more, turning data into revenue lift, lower acquisition costs, and happier customers.

The post Unfiltered: Adobe Analytics – Key takeaways from session #2 appeared first on Lynchpin.

CDP, what is it good for? – What do Customer Data Platforms do? Do you really need one – and if so, which type? 30 Oct 2:57 AM

The CDP (Customer Data Platform) market is now so broad with such a range of scopes and capabilities that it’s increasingly hard to make sense of it as one vendor category.

Asking “Do I need to invest in a CDP?” is becoming akin to asking “Do I need to invest in a Transport Enabling Device?” – if that ‘TED’ category included wheels, engines, ropes and batteries alongside full-blown automobiles and yachts.

Set against that scope for confusion, the potential value of first-party customer data is far clearer. The question then becomes: which technology is best placed to bring that value to fruition?

In this article we’ll explore the practicalities of what different flavours of CDP do and don’t do, which use cases they support, and whether you in fact need any of them at all.

What (actually) is a CDP?

Often the simplest question is the most important! And in the case of CDPs, the answer can span a broad assortment of responses depending on who – or rather which vendor – you ask.

Functionally, CDPs typically purport to do all or some of the following:

- Storage – act as a repository for data points associated with a prospect or customer (or related identifier).

- Identity Resolution – merge the facts and behaviours that have been gathered about users when it becomes possible to identify them as being related to the same person.

- Enrichment – act as a data clean room to extend first-party data with third-party data attributes via anonymised sharing.

- Segmentation – build segments of customers or prospects to be targeted with (or excluded from) specific propositions, campaigns, communications or offers.

- Activation – turn those segments into channel outcomes such as personalised website experiences, triggered emails and targeted advertising.
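The identity resolution step in the list above can be sketched as a union-find structure: every identifier starts as its own profile, and each piece of evidence linking two identifiers (such as a login that ties a cookie to an email address) merges their profiles. This is a minimal, deterministic sketch with hypothetical identifiers; commercial CDPs may also apply probabilistic matching, merge rules and unmerge handling that are not shown here.

```python
class IdentityGraph:
    """Minimal deterministic identity resolution using union-find."""

    def __init__(self):
        self.parent = {}  # identifier -> parent identifier

    def add(self, identifier):
        self.parent.setdefault(identifier, identifier)

    def _find(self, identifier):
        self.add(identifier)
        while self.parent[identifier] != identifier:
            # Path halving keeps the trees shallow as more links are recorded.
            self.parent[identifier] = self.parent[self.parent[identifier]]
            identifier = self.parent[identifier]
        return identifier

    def link(self, a, b):
        """Record evidence that two identifiers belong to the same person."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a

    def same_person(self, a, b):
        return self._find(a) == self._find(b)

    def profiles(self):
        """Group every known identifier into one set per resolved person."""
        groups = {}
        for identifier in list(self.parent):
            groups.setdefault(self._find(identifier), set()).add(identifier)
        return list(groups.values())


g = IdentityGraph()
g.link("cookie:abc", "email:jo@example.com")    # web session followed by a login
g.link("app:device-9", "email:jo@example.com")  # same email used to sign in on the app
g.add("cookie:zzz")                             # anonymous visitor, not yet identified

print(g.same_person("cookie:abc", "app:device-9"))  # True
print(len(g.profiles()))                             # 2
```

Storage, segmentation and activation would then operate on these resolved profiles rather than on raw identifiers.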

Some CDPs cover everything above and aim to become master data repositories for large portions of your customer data at their core. At the other end of the spectrum, some CDPs only do activation and lean on other existing platforms to do everything else – sometimes these are referred to as “composable CDPs”.

CDP-like functionality is also increasingly baked into CRM, CMS and analytics platforms.

Google Analytics 4 stores behavioural data, can resolve identity across mobile apps and websites, segment and then activate audiences within Google Ads: it’s basically a CDP, albeit one very much focused on activating a subset of data within the Google advertising ecosystem.

Your email marketing platform can upload customer lists to Meta for advertising – it’s becoming a CDP.

Your content management system tracks and segments behaviours and uses them to drive personalisation – it’s joined the CDP party too.

Architecturally, a modern tech stack has so many platforms touching customer data that, conceptually, pretty much anything can call itself a CDP.

Which brings us on to…

Use Cases

If use cases for a CDP are not clearly defined and prioritised, then it’s impossible to evaluate what a smart technology investment might be in this space.

Good use cases define how specific data drives specific outcomes, in clear language about how the customer is affected. For example:

- “Exclude existing customers from our Meta acquisition advertising”

- “Trigger reactivation emails when a customer has not been active on the website or mobile app for a month”

- “Instigate customer service follow-up calls when high value customers abandon an online support process”
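A well-defined use case translates almost directly into a segment rule, which is what makes the gap analysis that follows concrete. As a sketch, the reactivation example might look like this in Python, assuming activity timestamps from web and app are already collected per customer and that “a month” means 30 days; the function and field names are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: "not active for a month" is interpreted as 30 days.
INACTIVITY_THRESHOLD = timedelta(days=30)


def reactivation_audience(activity, already_contacted, now):
    """Customers whose latest web or app activity is older than the threshold.

    `activity` maps customer ID -> list of activity timestamps from any channel.
    Customers already sent a reactivation email are excluded.
    """
    audience = []
    for customer_id, timestamps in activity.items():
        last_seen = max(timestamps)
        if now - last_seen > INACTIVITY_THRESHOLD and customer_id not in already_contacted:
            audience.append(customer_id)
    return sorted(audience)


activity = {
    "c1": [datetime(2025, 1, 2), datetime(2025, 2, 20)],  # recently active on the app
    "c2": [datetime(2025, 1, 5)],
    "c3": [datetime(2025, 1, 1)],
}
print(reactivation_audience(activity, already_contacted={"c3"}, now=datetime(2025, 3, 1)))  # ['c2']
```

Written this way, the use case makes it obvious which capabilities are needed (cross-channel activity data, a segment rule, a connector to the email platform) and which may already exist elsewhere in the stack.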

Even better use cases put a potential business value on the outcomes, and hence can help with prioritisation.

Less good use cases focus on generic capabilities as opposed to specific customer outcomes, and might deploy industry buzzwords that are conveniently open to interpretation:

- “Drive real-time omni-channel personalisation”

- “Provide a single view of the customer”

Gap Analysis

Armed with use cases, any evaluation of CDP capabilities can then be focused on what you’re specifically looking to enable, and what the functional gap is in terms of storage, identity resolution, enrichment, segmentation and/or activation.

For example:

- If your CRM already has a segment of customers you want to exclude from advertising, and that CRM also has a connector to Meta to upload it, then you don’t need a CDP at all (for that use case).

- If you already have a data warehouse of behavioural data from web and mobile app usage (e.g. Google BigQuery), then you may only need CDP activation capability to connect it to your email marketing platform for triggered emails.

There is also a strategic gap analysis in relation to other technology plans. For example, if the organisation is already building out a data warehouse with segmentation capabilities in Databricks, then you may not want to build what will essentially become a duplicate data warehouse with segmentation capabilities in an Oracle CDP.

Finally, one attribute to probe carefully is “real time”. Ultimately nothing is real time: it is always a question of whether it is tolerable to wait milliseconds, seconds, hours or even a day for a change to a data point in one place to show up as a change in treatment for a customer elsewhere. And some data points don’t change that quickly (e.g. customer age), or may be better handled locally in the channel where they occur (e.g. personalising a page based solely on the previous page viewed).

Segmenting The Market

Just as with understanding the diversity of your own customer base, segmenting the CDP market is a good way to break down where different platforms focus their capabilities.

To aid clarity (or controversy), the quadrant map below adds some keywords to the Customer Data Platform labels to emphasise the practical focus of these systems, with some examples (non-comprehensive) of vendors operating in these segments.

The Classics (Put all your Customer Data in our Platform)

Sometimes referred to as “Legacy CDPs” by their composable colleagues, a more polite emphasis is to set these out as platforms that aim to do the full spectrum of storage, identity resolution, segmentation and activation. Critically, the starting point tends to be onboarding substantial amounts of data into the platform, which can result in duplicated storage versus the source platforms (especially if one of them is an existing data warehouse).

The Enrichers (Match your Customer Data with our data Platform)

Often not dissimilar to the Classics in functionality, but with added data clean rooms and proprietary third-party data, and hence a big focus on enrichment (and a desire to accumulate as much client data as possible to enable it). These platforms have often grown out of earlier Data Management Platforms that did similar enrichment for advertisers based on cookie data.

The Orchestrators (Activate your Customer Data in our Platform)

An overlap with marketing automation means some platforms can major on the orchestration of triggered marketing, especially through direct response channels such as email or SMS, as part of the CDP proposition.

The Composables (Get your Customer Data out of an existing Platform)

Composable CDPs, by contrast, are typically more focused on activating data from an existing data warehouse via external channels and tools, essentially operating as connectors that synchronise data from A to B. The theory is that you can use your existing technology platforms for storage and plug a far more specific capability gap with these; some offer segmentation and identity resolution as optional modules.

Pricing

If you are using the full gamut of CDP capabilities, rate cards can be complex, and anticipating what your “data tax” might end up as can be especially challenging, even with a calculator.

Even for composable (activation only) CDPs, the pricing models can vary quite significantly. Some charge by the connector, some by the number of times those connectors are “synchronised”, some by the number of underlying customer records irrespective of connector usage, some at a completely flat fee irrespective of anything else.

This all makes the ROI equation… complicated. But far less complicated if you have mapped out your use cases and can therefore cost some very specific scenarios and see how the costs scale in practice. In fact, without doing that, you could be signing a blank cheque.
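Costing specific scenarios can be as simple as a small model that applies each pricing approach to the volumes your use cases imply. The rates below are invented purely for illustration and do not reflect any vendor’s rate card; the point of the sketch is how the cheapest model can change as connectors, sync frequency and record counts grow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    connectors: int        # destinations the use cases need
    syncs_per_day: int     # how fresh the data must be in each destination
    customer_records: int  # profiles in scope

# Hypothetical rate cards for illustration only: real vendor prices differ.
PRICING_MODELS = {
    "per connector": lambda s: 6_000 * s.connectors,
    "per sync": lambda s: 0.05 * s.connectors * s.syncs_per_day * 365,
    "per record": lambda s: 0.02 * s.customer_records,
    "flat fee": lambda s: 40_000,
}


def annual_costs(scenario):
    """Estimated annual cost of one scenario under each pricing model."""
    return {name: round(model(scenario)) for name, model in PRICING_MODELS.items()}


launch = Scenario(connectors=2, syncs_per_day=24, customer_records=500_000)
scale = Scenario(connectors=6, syncs_per_day=1_440, customer_records=2_000_000)

for label, scenario in [("launch", launch), ("scale", scale)]:
    costs = annual_costs(scenario)
    print(label, costs, "cheapest:", min(costs, key=costs.get))
```

With these made-up rates, sync-based pricing is cheapest for the hourly two-connector launch but by far the most expensive once six destinations need minute-level freshness: exactly the kind of shift worth modelling before signing anything.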

Other Gotchas

“We integrate with X hundred connectors” – but what kind of connector? Dig into the documentation for some CDPs and that “connector” turns out to be a batch SFTP upload rather than a more real-time API integration. That might be all you need, but do probe the capabilities and limitations of the connectors you know you will be leaning on to enable your use cases.

Some CDPs (here’s looking at you, Adobe) require you to map your data into a proprietary schema before you can start working with it. If their schema fits your business model and data sources, great; if not, shoe-horning your data into it could be a substantial challenge and overhead.

The Non-CDP

Extract Transform and Load (ETL) is the process of getting data out of an API and into a data warehouse. And Reverse ETL is the process of getting it out of a data warehouse and into an API. What’s the difference between a Reverse ETL Solution and a Composable CDP? Potentially not a lot, apart from the name. And increasingly, cloud data warehouses may integrate their own reverse ETL connectors and reduce the market for composable CDPs as a result.
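At its core, a reverse ETL or composable CDP sync reads rows from the warehouse, works out what has changed since the last run, and pushes only those changes to the destination API. The following is a minimal sketch of the change-detection step, assuming rows keyed by a hypothetical `customer_id`; the actual API call is left out, since every destination differs.

```python
import hashlib
import json


def row_fingerprint(row):
    """Stable hash of a row's contents, so unchanged rows can be skipped."""
    return hashlib.sha256(json.dumps(row, sort_keys=True).encode("utf-8")).hexdigest()


def plan_sync(warehouse_rows, last_synced, key="customer_id"):
    """Compare current warehouse rows with fingerprints saved after the previous run.

    Returns (rows to upsert in the destination, keys to delete, state to save for next run).
    """
    new_state = {}
    upserts = []
    for row in warehouse_rows:
        fingerprint = row_fingerprint(row)
        new_state[row[key]] = fingerprint
        if last_synced.get(row[key]) != fingerprint:
            upserts.append(row)  # new or changed since the last sync
    deletes = sorted(set(last_synced) - set(new_state))  # rows that have left the segment
    return upserts, deletes, new_state


first_run = [
    {"customer_id": "c1", "segment": "lapsed"},
    {"customer_id": "c2", "segment": "vip"},
]
upserts, deletes, state = plan_sync(first_run, last_synced={})
print(len(upserts), deletes)  # 2 []

second_run = [
    {"customer_id": "c1", "segment": "lapsed"},  # unchanged: skipped
    {"customer_id": "c3", "segment": "new"},     # new row: upserted
]
upserts, deletes, state = plan_sync(second_run, last_synced=state)
print([r["customer_id"] for r in upserts], deletes)  # ['c3'] ['c2']
```

Whether this logic lives in a product labelled “reverse ETL”, a composable CDP or a warehouse’s own connector makes little difference to what it does.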

To Sum Up

Should the CDP vendor category even exist anymore?

Perhaps not: cloud data warehouses are becoming increasingly capable in areas that CDPs traditionally covered as independent products. And the direction of travel is towards both more point solutions for specific capabilities (e.g. activation) and CDP-like functionality being integrated into a myriad of other experience and marketing automation platforms.

Stepping back, we all need to join the dots with customer data and focus on what makes it valuable – to us and to them. Nail your specific use cases and it becomes much easier to identify what capabilities across that storage, identity resolution, enrichment, segmentation and activation are required, and what is missing. Let that gap analysis firmly set the scene for how you look at solutions in this space and you’re far less likely to end up mis-sold or lost.

As an independent consultancy, we’re always here to help navigate the market, help you make the most of what you’ve got already and make smart choices for now and the future.

The post CDP, what is it good for? – What do Customer Data Platforms do? Do you really need one – and if so, which type? appeared first on Lynchpin.

Unfiltered: Adobe Analytics – Key takeaways from session #1 9 Oct 3:10 AM

Adobe CJA usage; restoring shaken trust, securing buy-in, supporting data enablement, self-serve, and governance.

Our recent Unfiltered: Adobe Analytics session brought together leading professionals to share candid insights on major industry shifts, including the rise of Customer Journey Analytics (CJA), the future of Adobe Analytics, and a range of practical strategies to secure buy-in, improve governance, build trust, and prove value across the business.

Below is a quick summary of 5 themes and strategies from the discussion that you can begin applying to your own analytics practice today:

1) CJA is promising but adoption remains limited

CJA promises a simple, unified view of journeys, but uptake in the UK appears low despite an aggressive push from Adobe. Reasons may include migration complexity (complex schemas and no simple route to switch), a perceived capability gap between CJA and Adobe Analytics, reliance on the Edge Platform/AEP, and cost concerns.

More technical Adobe Analytics users in particular may see little or no reason to switch; those proficient in SQL, and in stitching and enriching data in platforms like Snowflake, will need distinctive benefits beyond what they can already deliver.

2) Vendor trust matters – Have recent public mix-ups shaken confidence?

Recent Adobe Edge routing issues (publicly acknowledged by Adobe within their status updates) may have dented the confidence of certain users.

In the case of CJA uptake – many organisations might now demand even clearer, safer migration paths; demonstrable business cases that showcase ROI in terms of risk versus reward; and reliability assurances before committing any further.

3) Mastering buy-in: Creating business cases with clear user benefits and measurable hooks

To avoid “new toy” syndrome, frame the adoption of new tools and builds around tangible benefits: faster insights, broader access (mobile or low-friction delivery), and personal measures senior leaders care about (their specific ability to access a specific dashboard on-the-go, for example).

Secondly – taking the initiative to share small, surprising insights can unlock effective proof of value. Build the case for further investment by highlighting what works well already – inspire curiosity around the business, challenge preconceived ideas, and showcase the potential for more.

4) Supporting enablement and self-serve

Self-serve continues to be an ongoing aspiration for many teams, but it only really succeeds with ongoing enablement.

Establish internal training – Set up regular drop-in sessions or personalised sessions for specific departments or roles to help the wider business overcome perceived learning curves and improve data literacy. This has the additional benefit of fostering stronger internal relationships and creating important feedback loops.

Top Tip: The goal isn’t just to complete the training; it’s to motivate consistent application beyond that first session. By providing short, personalised takeaway exercises you can get users hooked on applying their new skills and forming good measurement habits.

5) Building a foundation of trust with governance and strong communication

Adobe Analytics can be a trickier platform to use than some teams may be used to. Simplify interpretation and help end users quickly get over the initial ‘hump’ using custom dashboards, pre-selected lists of dimensions or metrics, and Workspaces shipped with their essentials, so users understand what they’re seeing.

Acknowledge and communicate all known issues within your implementation (like outdated eVars, mislabelling, etc) – by informing users about what to ignore, you instil trust. In our experience, little quirks in an implementation can be the source of organisation-wide confusion. Custom documentation or labelling may be helpful here.

Remember that experimentation and personalisation initiatives need discipline and explainable context to thrive. Encourage teams to respect testing timelines, limit premature access to live results, and convert inconclusive outcomes into actionable learnings that inform follow-up tests. By owning and reframing the failures, such as an inconclusive A/B test, you can build transparency and credibility for your team, instead of sweeping findings under the rug.

What’s next for your analytics strategy?

The session surfaced a range of viewpoints on real-world strategies to drive measurement in Adobe Analytics, accounting for both business complexities and the complexities of the tool itself. It made clear that technology alone won’t drive adoption – clarity of use cases, simple processes, clear governance and compelling business benefits will.

We welcome you to join us at our next event to connect with peers, share your challenges, and discover how to drive analytics forward by surfacing and addressing these common pain points head-on.

Secure your spot at upcoming events

Want personalised support?

Our team at Lynchpin turns scattered data into clear insight so leaders can act fast. Independent since 2005, our experts are here to help with all of your data and analytics challenges. We have deep vendor-neutral skills across the full analytics stack – supporting leading organisations such as Channel 5, Canon, Hotel Chocolat, John Lewis Finance, AbbVie, and more, turning data into revenue lift, lower acquisition costs, and happier customers.

The post Unfiltered: Adobe Analytics – Key takeaways from session #1 appeared first on Lynchpin.

20 Years of Analytics: What Does the Data Say? 5 Sep 1:29 AM

2025 marks 20 years since Lynchpin started trading, and 25 years for me personally working in the field of data and analytics (isn’t it nice when the numbers line up so beautifully!).

There have been plenty of exciting developments and changes over those decades, but also some unbending truths that haven’t shifted an inch. In the context of the latest AI wave, it’s a good time to reflect on some of the key shifts and anchors over the past couple of decades, and how they might project forward based on that experience.

Web Analytics Goes Full Circle

In 2000, web analytics was all about counting – vanity metrics were popular with Venture Capitalists – and the ability to track end-to-end marketing performance was rarely even articulated as a need.

The gold standard of “how many visitors?” was key to pumping up valuations, but of course nobody could agree how “a visitor” should be defined. The Audit Bureau of Circulation – who were more used to auditing newspaper circulation figures – stepped in to provide an industry standard that could be calculated in 3 completely different ways, and my first foray into web analytics was writing Perl scripts that would churn through web server logs at an early dot com business to give the magic audited number that would keep the investors happy.

In the early noughties, the web analytics industry was exploding with platforms that put not very useful and not very performant front ends on top of clickstream data. And then that market consolidated in what felt like a heartbeat when Google released a free analytics tool in 2006. But to answer any real business questions, the only real solution was still to stick the raw data into a database and start to build your own data model.

At Lynchpin we bit the bullet early and literally racked up database servers in a datacentre (this was pre-cloud) to answer those key questions: what was the real customer journey across display and search (in 2008), how did publishing recency and frequency affect search visibility and hence reach for newspapers globally (in 2009), and how could you model the long-term impact of content on customer acquisition (in 2014)?

We also learned early on that to effect actual change you needed to give people tools, not just reports, that they could use in their day-to-day decision making. We were super early adopters of Tableau back in 2008 (Power BI didn’t appear until 2015) to arm our clients with role-focused tools that gave them data-driven recommendations and scenario planning aligned with the levers they could pull around trading, merchandising, pricing and budget allocation.

Fast forward to today, and it does make me smile a little to see that the de rigueur model for enterprise digital analytics is converging on just sticking the raw clickstream data into a data warehouse and taking it from there. That shift has been accelerated not least by Google Analytics 4 combining an arguably weaker user interface with a convenient free export stream into BigQuery.

And with the shifts in consent for digital measurement and the stealthy introduction of modelled data into the platforms, the old question of “what exactly is a visitor” still feels very relevant.
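To make the “what is a visitor?” question concrete: in the GA4 BigQuery export, each row is an event carrying a `user_pseudo_id` (a pseudonymous browser or app-instance identifier, not a person) and an `event_params` array in which `ga_session_id` is stored as an integer value. The sketch below assumes rows have already been pulled into Python as dictionaries shaped like that export; the helper names `param_value` and `count_visitors` and the sample rows are hypothetical. It shows that “users” and “sessions” are simply two different distinct counts over the same raw events, and that rows without an identifier drop out of both.

```python
from typing import Any, Dict, Iterable, Optional

Row = Dict[str, Any]


def param_value(row: Row, key: str) -> Optional[Any]:
    """Return the first non-null typed value for `key` in a GA4-style event_params array."""
    for param in row.get("event_params", []):
        if param["key"] == key:
            value = param["value"]
            for typed in ("string_value", "int_value", "float_value", "double_value"):
                if value.get(typed) is not None:
                    return value[typed]
    return None


def count_visitors(rows: Iterable[Row]) -> Dict[str, int]:
    """Count distinct users and distinct (user, session) pairs."""
    users = set()
    sessions = set()
    for row in rows:
        user = row.get("user_pseudo_id")
        if user is None:
            # No identifier: the event cannot be attributed to any user or session
            continue
        users.add(user)
        session_id = param_value(row, "ga_session_id")
        if session_id is not None:
            # Session IDs are only unique per user, so pair them with the user ID
            sessions.add((user, session_id))
    return {"users": len(users), "sessions": len(sessions)}


if __name__ == "__main__":
    sample = [
        {"user_pseudo_id": "a", "event_params": [{"key": "ga_session_id", "value": {"int_value": 1}}]},
        {"user_pseudo_id": "a", "event_params": [{"key": "ga_session_id", "value": {"int_value": 2}}]},
        {"user_pseudo_id": "b", "event_params": [{"key": "ga_session_id", "value": {"int_value": 1}}]},
        {"user_pseudo_id": None, "event_params": []},
    ]
    print(count_visitors(sample))  # {'users': 2, 'sessions': 3}
```

Changing any one rule (for example, counting a session per `ga_session_id` without the user, or keeping unidentified rows) changes the headline number, which is exactly why the definition still matters.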

So, have we come full circle? Not quite. What has changed is the accessibility and affordability of compute (a recurring theme throughout this reflection): a small cloud processing bill rather than a rack of servers is now the entry point for really getting stuck into digital data. What hasn’t changed is the importance of getting the architecture and data model right, and the challenge of successfully aligning the outputs with business outcomes.

Measuring Behavioural Shifts

It’s very human to assume the rest of the planet thinks and behaves as we do, and a consistent danger point in analysis has always been assuming the average represents everyone and then missing the more subtle and slowly shifting tides of different underlying segments.

Throughout the twenty-tens there were at least 5 “This is The Year of Mobile!” pronouncements I can recall; in reality, none of those years were “the year of mobile” and every year was “a year of slightly more mobile”. The percentage shares slowly shifted, at different rates in different demographics, and at the same time the internet started splitting into Apple/Safari/iOS “privacy first” versus Google/Android/Chrome “advertising first” worlds.
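A toy illustration of the averaging trap: the blended mobile share can move steadily while the underlying segments shift at very different rates. The segment names and visit counts below are entirely made up for illustration, and `shares` is a hypothetical helper.

```python
from typing import Dict, Tuple

# Hypothetical (mobile_visits, total_visits) per segment for one year
Visits = Dict[str, Tuple[int, int]]


def shares(visits: Visits) -> Dict[str, float]:
    """Mobile share for each segment, plus the blended share across all segments."""
    result = {segment: mobile / total for segment, (mobile, total) in visits.items()}
    mobile_sum = sum(mobile for mobile, _ in visits.values())
    total_sum = sum(total for _, total in visits.values())
    result["blended"] = mobile_sum / total_sum
    return result


year_1: Visits = {"18-34": (600, 1000), "55+": (100, 1000)}
year_2: Visits = {"18-34": (900, 1000), "55+": (100, 1000)}

if __name__ == "__main__":
    for label, year in (("year 1", year_1), ("year 2", year_2)):
        print(label, {k: round(v, 2) for k, v in shares(year).items()})
```

Here the blended share rises by 15 points, a figure that describes neither segment: one jumped by 30 points and the other did not move at all.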

I suspect a similar thing is about to happen with shifts from keyword search to prompts to agentic delegation, set against potential cravings for more direct engagement with “authentic” humans. I’d certainly be surprised if it’s a uniform transition across all audiences in all contexts.

Just like the “year(s) of mobile”, the businesses that measure and understand the nuances of these shifts among their own customers will be the ones that successfully tap into the opportunities and manage the threats. And each new mode of engagement raises an important challenge of its own: how to measure that engagement in the first place.

Follow The Money

Or at least watch it. Flows of capital determine rates of progress but also define (sometimes inflated) expectations of value that need to be realised to give a return on that capital.

When Uber was doing its initial market entry, a friend used to cheerily remind me that for each unfathomably cheap ride a generous VC had basically put their hands directly into their pockets to pay a chunk. It was all subsidised to grab the market… until it wasn’t anymore.

Similarly, every new wave of technological progress (cloud compute, data lakehouses, customer data platforms, large language models) is heavily subsidised… until it isn’t anymore, and the investors who won the market share race want the return for their risk.

A comment that stuck with me from an early debate about the relative merits of the different cloud providers was the observation that all of them were ultimately just reselling electricity packaged up into different units of consumption.

That comment feels even more relevant now that the cloud providers are literally building their own power stations to fuel AI usage, and the sustainability of our accelerating compute addiction is an increasingly real environmental issue. Quantum computing is genuinely exciting because of its potential to rewrite the compute vs electricity relationship, but given how long-term and uncertain the pay-off is, will the capital flows deliver it in time?

Regulatory Lag

In contrast to the capital flows that seek to define the future, regulation has often struggled to shut doors that the pace of change has already blown wide open, hampered by legislative lag. Reflecting on the past 20 years, it has often felt like we’ve been doubling down on fixing the last-but-one issue in data privacy while newer and much bigger risks run unchecked.

To start on a more positive note, I’d venture that GDPR has stood the test of time as a genuinely decent piece of legislation, mainly because its principles align well with good principles of data strategy: define your use cases and process data with a clear purpose.

By contrast, the legislative failure to properly integrate e-Privacy and GDPR in Europe has been a slow-motion car crash of incomprehensible cookie consent dialogs. I don’t think Tim Berners-Lee’s vision when he invented the World Wide Web was that everyone would review and accept a new set of legal terms and conditions for every hyperlink they followed. The reality is that cookie consent should have been a browser standard, and the industry’s failure to pull together on that, or to put aside some of its vested interests, destroyed the user experience.

Meanwhile, other potentially far more intrusive data collection (mobile apps and location spring to mind) ran relatively unchecked, and we ended up in the weird situation where Apple became the self-appointed global regulator of consumer privacy on their own devices.

In the current geopolitical climate, I can’t see AI regulation being anything other than a case of shutting the stable door after the horse has bolted. That arguably pushes the responsibility back on us as an industry to wrestle with the challenges and lead more effectively in protecting consumer data and business knowledge.

Waves of AI

Around 2018, analytics had a big AI wave centred around machine learning, and suddenly every analytics and marketing platform was “AI Enabled”. What was new? Not much, in fact: most of the algorithms being deployed (e.g. to do some basic segmentation or forecasting) had been around for decades, and some were simply rebranded centuries-old maths with nothing to do with an AI revolution at all.
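As an illustration of how old some of that “AI” maths is, a basic trend forecast can be nothing more than an ordinary least-squares line, a method published by Legendre and Gauss in the early 1800s. The function names below are hypothetical and the example figures are illustrative only; a real forecast would also need to handle seasonality and uncertainty.

```python
from typing import List, Sequence, Tuple


def fit_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of values against time index 0..n-1; returns (intercept, slope)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points to fit a line")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def forecast(values: Sequence[float], horizon: int) -> List[float]:
    """Extend the fitted line `horizon` steps beyond the last observation."""
    intercept, slope = fit_trend(values)
    n = len(values)
    return [intercept + slope * (n + h) for h in range(horizon)]


if __name__ == "__main__":
    print(forecast([10, 12, 14, 16], horizon=2))  # [18.0, 20.0]
```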

The real AI wave around machine learning eased in more subtly from that point, fuelled by the availability of compute, and specifically the proliferation of cloud and open source. As soon as well-maintained machine learning toolkits were openly available as Python packages that could be run in the cloud at negligible cost, the previous platform barriers to entry (expensive servers and licenses) started to evaporate.

With those platform barriers swept away, the widening gap was practical skills and experience: moving models beyond proof of concept into production and integrating them with business processes. Interestingly, many of the machine learning models we successfully deploy for areas such as pricing stop being called AI by the client as soon as they are operational: when it works, it’s just “automation” or “optimisation”.

So, does that previous wave of AI help to anticipate the impact of the current wave around generative AI on data and analytics? Certainly the availability of compute is again spearheading progress, and aspects of open source (or at least open model weights) are fuelling the competitive bleeding edge.

Interfaces are Key

What is different this time is the accessibility of the human interface – in theory the final technical barrier to entry swept away. In a world where anyone can prompt to an apparently unrestricted range of outcomes, where does data, knowledge and experience fit in?

Analysts need accessible, timely and well-structured data that they can trust to base analysis on, and models and agents are no different. And lots of businesses still struggle to arm their human analysts with the right data at the right level of detail to do their jobs effectively.

RAG (Retrieval-Augmented Generation) allows LLMs to tap into live first-party data (e.g. live transactions), but it can only be as good as that first-party data in terms of quality and availability.
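The retrieval half of RAG can be sketched without any model at all: find the first-party records most relevant to a question and place them in the prompt. The sketch below uses naive keyword overlap as a stand-in for the embedding search a production system would more typically use; the record format and the `retrieve` and `build_prompt` helpers are hypothetical. It also makes the data-quality point tangible: whatever is in the records, stale or wrong, goes straight into the prompt.

```python
import re
from typing import List, Sequence, Set


def tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens, used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, records: Sequence[str], k: int = 2) -> List[str]:
    """Return up to k records sharing the most tokens with the question."""
    q = tokens(question)
    scored = [(len(q & tokens(r)), i, r) for i, r in enumerate(records)]
    # Highest overlap first; ties keep original order; records with no overlap are dropped
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [r for score, _, r in scored[:k] if score > 0]


def build_prompt(question: str, records: Sequence[str], k: int = 2) -> str:
    """Assemble a grounded prompt from the retrieved records."""
    context = retrieve(question, records, k)
    lines = "\n".join(f"- {c}" for c in context) or "- (no matching records)"
    return (
        "Answer using only the context below. If it is insufficient, say so.\n"
        f"Context:\n{lines}\n"
        f"Question: {question}"
    )
```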

There are also opportunities to turn RAG on its head and use LLM research to feed closed-loop machine learning models with additional research context (e.g. new features for forecasting), provided there is a good approach to ongoing testing and optimisation.

Finally, emerging (albeit competing) standards such as the Model Context Protocol (MCP) give LLMs broader interfaces for interacting with other systems, but in an enterprise they need very well-defined governance to manage risks and avoid data breaches.
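Whatever protocol is used, that governance tends to come down to explicit rules about which tools an agent may call and which fields may leave the business. The sketch below is not the MCP SDK; it is a generic, hypothetical gate (`ToolPolicy`, `guarded_call`, and all tool and field names are illustrative) showing an allowlist plus field redaction applied before any result reaches the model.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet

ToolFn = Callable[..., Dict[str, Any]]


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical governance policy for agent tool calls."""
    allowed_tools: FrozenSet[str]
    redacted_fields: FrozenSet[str] = frozenset()


def guarded_call(policy: ToolPolicy, tools: Dict[str, ToolFn], name: str, **kwargs: Any) -> Dict[str, Any]:
    """Run a tool only if the policy allows it, then redact sensitive fields from the result."""
    if name not in policy.allowed_tools:
        raise PermissionError(f"tool '{name}' is not allowed by policy")
    result = tools[name](**kwargs)
    return {k: ("[REDACTED]" if k in policy.redacted_fields else v) for k, v in result.items()}
```

Keeping the policy as data rather than scattering checks through prompts makes it reviewable and version-controllable, in the same way as the rest of the pipeline.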

These behind-the-scenes interfaces are going to be the critical success factors for enterprise AI implementations. I believe this is the biggest built-in hedge in data and analytics right now: humans and machines need to be fed with well-structured and trusted data, and the data strategy fundamentals are very similar irrespective of the consumer.

This latest AI wave just means the businesses that aren’t on top of their data will fall behind much more quickly.

Context is Value

The most important and underrated analyst skill has always been the ability to interpret data in the context of a business and all its nuances: the real bridge between insight, execution and outcomes. But context requires bringing knowledge together, and interpretation requires critical thinking.

Can GenAI bridge, or help to bridge, that context and critical thinking gap? Critically, models can only feed off the data made available to them, and when every vendor is sticking a GenAI layer (and cost) onto their platform there is a real risk that valuable proprietary context gets siloed within those platforms.

And because capital flows determine that only a very limited number of players can win the race to train the best overall model, ultimately a limited number of models will end up licensed to other vendors to repackage, further homogenising those layers of siloed context.

It’s AI bloat, and eventually businesses will need to consider the multiplying cost impact. More importantly, unless businesses own and consolidate the proprietary context that lets them execute on those platforms with a competitive edge (based on better data and hence better knowledge), that bloat is simply a race to the bottom on performance rather than an investment.

So, while the current AI wave might be focused on “AI everywhere”, the tenets of data strategy and what makes proprietary insight valuable are not going anywhere. Context is value, and businesses will ultimately need to train models on their own consolidated data to compete and prosper.

The Next 20 Years

I wonder how many AI waves there will be over the next 20 years. Will we notice them as they pass, or will it be the more subtle shifts underneath those waves that turn out to be more impactful when we look back?

For those of us who had the fun of working through the dot com boom and bust of the early noughties, it’s hard not to see at least some parallels with today’s investment patterns and short-term expectations. But it’s also an important reminder that the real impact of a technology revolution can sweep in beneath the initial hype once that hype has subsided, and turn entire industries on their heads.

Ultimately, interfaces are key, and context is value in data and analytics. I believe this will continue to define the relationship between smart humans, smart technology and real business outcomes in this industry.

The post 20 Years of Analytics: What Does the Data Say? appeared first on Lynchpin.

(Video) How to customise your Report library in GA4 20 Jun 9:01 AM (6 months ago)

In the latest episode of our ‘Calling Kevin’ video series, we show you how to customise your GA4 report library – updating your Google Analytics reporting interface to include a new, personalised collection of reports.

Follow these quick and easy steps to begin tailoring your GA4 report library menu and navigation for a more efficient reporting experience. We also share a helpful refresher on how to work with topics, templates and more!

For more quick GA4 tips, be sure to check out other videos from our ‘Calling Kevin’ series.

The post (Video) How to customise your Report library in GA4 appeared first on Lynchpin.

Data pipelines using Luigi – Strengths, weaknesses, and some top tips for getting started 1 May 8:38 AM (7 months ago)

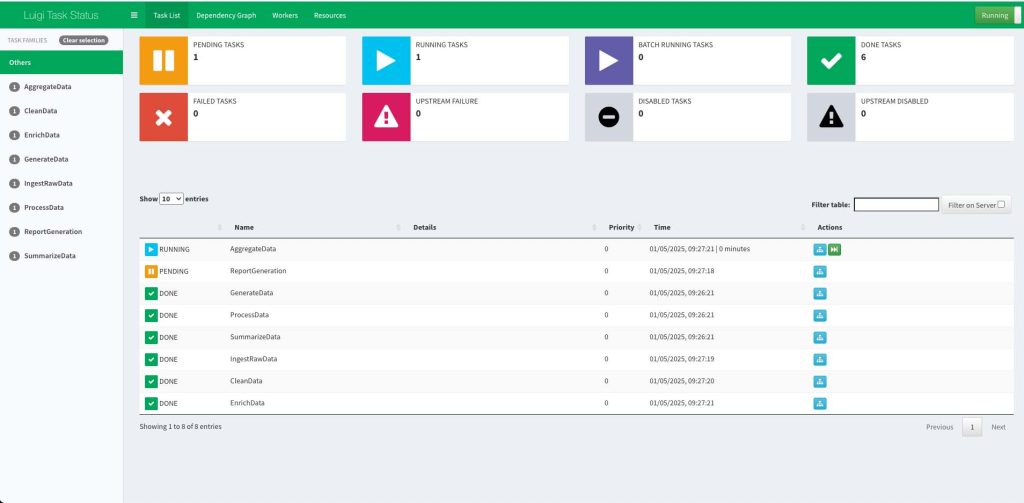

At Lynchpin, we’ve spent considerable time testing and deploying Luigi in production to orchestrate some of the data pipelines we build and manage for clients. Luigi’s flexibility and transparency make it well suited to a range of complex business requirements and to seamlessly support more collaborative ways of working.

This blog post draws from our hands-on experience with the tool – stress-tested to really understand how it performs day to day in real-world contexts. We will begin by walking through what Luigi is, why we like it, where it could be improved, and share some practical tips for those considering using it to enhance their data orchestration processes and data pipeline capabilities.

What is Luigi and where does it sit in the data pipelines market?

Luigi is an open-source tool developed by Spotify that helps automate and orchestrate data pipelines. It allows users to define dependencies and build tasks with custom logic using Python, offering flexibility and a fairly low barrier to entry for its quite complex functionality.

Despite Spotify having since adopted a newer orchestration tool, Flyte (originally developed at Lyft), Luigi is still widely used by many major brands and continues to receive updates, allowing it to keep maturing as a reliable choice for a range of data orchestration use cases.

Luigi sits amongst many popular tools used for data orchestration in the data engineering space – some of which are paid, while others are similarly open source.

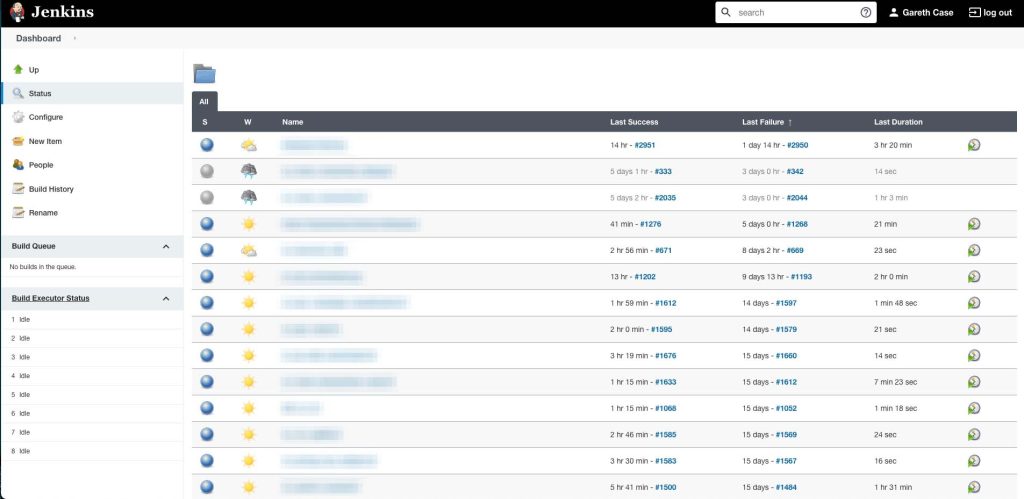

Another tool we’ve used for data orchestration is Jenkins. Although it isn’t designed for more heavy-duty pipelines, we’ve found it to work very well as a lightweight orchestrator, managing tasks and dependencies.

In the following section, we’ll break down some benefits of using Luigi for your data pipelines and a few reasons why you may choose it over a comparable tool such as Jenkins.

What we like

Transparent version control:

One of the key advantages of Luigi is that pipelines are written in Python. This gives you transparent version control over your data pipelines: every change is committed and traceable, so you know exactly what changed, can inspect it, and can see who made it and when. This becomes even more powerful when linked to a CI/CD pipeline, as we do for some of our clients, because the repository then becomes the single source of truth for the pipeline.

With Jenkins, for example, changes can be made without it being obvious what was changed or by which team member (unless explicitly communicated). That visibility matters more and more as you manage complex data pipelines with many moving parts and dependencies.

Dependency handling and custom logic capabilities:

Managing data pipeline dependencies is where Luigi truly stands out. In a tool like Jenkins, downstream tasks can be orchestrated, but this often requires careful scheduling or wrapper jobs, which can become complicated and fairly manual depending on your needs. Luigi simplifies this and enables smoother automation by letting you define all dependencies directly in Python, supporting logic such as: ‘Run a particular job only after a pipeline completes, and only do this on a Sunday or if it failed the previous Sunday.’

This level of custom logic is trivial in Python but can be difficult to replicate in Jenkins, where perhaps the only option is to run on a Sunday without any conditions surrounding it.

Pipeline failure handling:

Luigi assumes tasks are idempotent: once a task has run and produced its output, it’s treated as ‘done’ and won’t be re-run unless you remove that output. This is a particularly useful feature if you have big, complex pipelines and only need to re-run the jobs that failed. Rather than re-running everything, you can find the failed task, delete any output it left behind, and save time when re-executing the job.

Backfilling at the point of a task:

Luigi handles backfilling easily by allowing users to pass parameters directly into tasks.

This allows you to retrieve historical data (for example, backfilling from the beginning of last year to present) without having to change the script or config files.

Luigi treats each distinct set of parameter values as a separate task, so even if the job has previously run, it will recognise the changed parameters and simply run with them.

Efficiency to set up, host, and use alongside existing infrastructure:

While tools such as Apache Airflow may require a Kubernetes cluster (and more) to begin running, Luigi is far simpler to host. You can run it on a basic VM (virtual machine) or on Google Cloud Platform as a Cloud Run job. This makes it a great choice for smaller data pipelines or client-specific pipelines that you may want to decouple from the main infrastructure.

Market maturity and active use and development by many large brands:

Luigi has a large user base, including a host of major brands over the years such as Squarespace, Skyscanner, Glossier, SeatGeek, Stripe, Hotels.com, and more. This is integral to its maintenance and viability as a good open-source tool. Its core functionality rarely changes, making it a stable and reliable choice; the updates we’ve experienced have primarily focused on maintaining security rather than big overhauls of its functionality, which brings us to a few of its shortfalls…

What we don’t like

Limited frontend and UI:

Luigi’s frontend leaves a lot to be desired. Firstly, it only really shows you jobs that are running or have recently succeeded in running, so if you have many running jobs in one day, the History tab fails to give you a strong overview of information.

When something fails, you’ll be notified, and you can inspect logs in a location you specify in advance; however, it would be nice if the frontend provided a good summary of this information instead.

Workarounds do exist, such as saving your task history (e.g. which tasks ran, their status, how long they took) in a separate database table (for example, in Postgres), where it can be visualised in an external run dashboard. This gives you a more personalised frontend, with better monitoring and visibility into run times, failure rates, and so on.

Setting something like this up would provide more feature parity with a tool such as Jenkins, which, by contrast, does a great job at providing stats and visual indicators for task history, job health, what’s running, and more – right out of the box.

Documentation could be improved:

While Luigi provides all the key documentation you need, it’s not always the easiest to find or navigate, and compared with tools such as dbt, the documentation as a whole can feel sparse in places, especially when dealing with more advanced features or plugins.

For instance, helpful features such as dependency diagrams or task history tracking involve installing separate modules, a process that isn’t particularly well explained in the official documentation.

In many instances, users may find themselves gaining the most clarity about how the tool works by trying things out and learning as they go.

Python path issues – everything must be clear or else Luigi will struggle to find it:

To avoid a barrage of ‘module not found’ errors, Luigi will need to know exactly where everything lives in your environment.

A workaround we found useful is creating a shell script that sets out all the necessary paths and everything else Luigi may need to run successfully.

While something like this may take a little time to set up, it’s a small level of upfront effort to improve your workflow in Luigi and avoid any issues in the longer run.
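The shell-script approach amounts to setting `PYTHONPATH` explicitly before Luigi starts. The sketch below expresses the same idea as a small Python launcher instead; the project layout (`src/`, `pipelines/`), the `luigi_command` helper, and the module and task names are hypothetical. It uses `python -m luigi --module …`, the module form of the `luigi` command-line entry point.

```python
import os
import sys
from pathlib import Path
from typing import Dict, List, Sequence, Tuple


def luigi_command(project_root: str, module: str, task: str,
                  task_args: Sequence[str] = (),
                  extra_paths: Sequence[str] = ("src", "pipelines")) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) for running a Luigi task with an explicit PYTHONPATH."""
    root = Path(project_root).resolve()
    paths = [str(root)] + [str(root / p) for p in extra_paths]
    env = dict(os.environ)
    existing = env.get("PYTHONPATH")
    # Project paths go first so they win over anything already on the path
    env["PYTHONPATH"] = os.pathsep.join(paths + ([existing] if existing else []))
    argv = [sys.executable, "-m", "luigi", "--module", module, task, *task_args, "--local-scheduler"]
    return argv, env
```

The result can be passed straight to `subprocess.run(argv, env=env, check=True)`, so every run sees the same paths regardless of where it was launched from.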

Our top tips for getting started with Luigi:

- If one of your tasks fails, make sure you delete its output file before running it again. If Luigi finds an output file, it will assume the task completed successfully and skip it in the re-run.

- To make up for Luigi’s limited frontend, we think it’s worth your time to set up your own custom run dashboard to monitor tasks and compensate for a UI which falls short of its competitors and doesn’t provide a tidy and complete overview of tasks.

- For a smooth and pain-free setup, we recommend using a shell script to handle Python paths and prevent issues that stop Luigi from locating your files.

- Be prepared to dive in and get your hands dirty to really understand how things work in Luigi. Documentation is thin in places or sometimes hard to find when compared to other tools on the market, so you may find there is a bit of a learning curve or trial and error process to be aware of.

Conclusion:

We think Luigi is a powerful data orchestration tool for anyone who is comfortable with Python, has experience managing data pipelines, and is happy to get to grips with a few quirks that can make onboarding a bit challenging.

If you’re looking for an alternative to tools like Apache Airflow or Jenkins, Luigi is definitely worth trying out. While we recognise that its UI and documentation are lacking compared with other tools in this space, we found that Luigi’s version control, dependency handling, and custom logic capabilities make it a handy tool for a range of our clients’ use cases.

For more information on how we can support your organisation with data pipelines and data orchestration – including custom builds, pipeline management, debugging and testing, and optimisation services – please feel free to contact us or explore the links below.

The post Data pipelines using Luigi – Strengths, weaknesses, and some top tips for getting started appeared first on Lynchpin.

Automated testing: Developing a data testing tool using Cursor AI 11 Dec 2024 8:17 AM (last year)

In this blog, we discuss the development of an automated testing project, using the AI and automation capabilities of Cursor to scale and enhance the robustness of our data testing services. We walk through project aims, key benefits, and considerations when leveraging automation for analytics testing.

Project Background:

On an ongoing basis, we upgrade a JavaScript library that we manage and deploy to numerous sites, adding a range of improvements and enhancements. The library integrates with different third-party web analytics tools and performs a number of data cleaning and manipulation actions. Once we upgrade the library, our main priorities are:

- Feature testing: Verify new functionality across different sites and environments

- Regression testing: Ensure existing functionality has not been negatively affected across different sites

To achieve this, we conduct a detailed testing review across different pages of the site. This involves performing specific user actions (such as page views, clicks, searches, and other more exciting actions) and ensuring that the corresponding events are triggered as expected. We capture the network requests sent to vendors such as Adobe Analytics or Google Analytics, using the browser’s developer tools or a network debugging tool (e.g. Charles), and verify that the correct events are triggered and the relevant parameters are captured accurately. By ensuring that all events are tracked with the right data points, we can confirm that both new features and the existing setup are working as expected.

Project Aim:

To optimise this process and reduce the manual effort involved, we developed an automated testing tool designed to streamline and speed up data testing. As an overview, this tool automatically simulates user actions on different sites and different browsers, triggering the associated events, and then checks network requests to ensure that the expected events are fired, and the correct parameters are captured.

Automated Testing Benefits:

In the era of AI, automation is a key driver of efficiency and increased productivity. Automating testing processes offers several key benefits to our development and data testing capabilities, such as:

- Less time spent on setup and on creating testing documentation: We’re able to run through different tests and scenarios with a one-time setup for each site and each version.

- More accurate data testing: With a thought-out test plan which is followed precisely, we’re able to put more trust in our testing outcome. This helps us identify issues quicker.

- Better test coverage: We can run tests on different browsers and devices, using the same setup.

How We Did It:

We chose Python as the primary scripting language, as it offers flexibility for handling complex tasks. Python’s versatility and extensive libraries made it an ideal choice for rapid development and iteration.

For simulating a variety of user interactions and conducting tests across multiple browsers, we selected Playwright, a powerful open-source library for browser automation. It supports cross-browser testing across Chromium (Chrome), WebKit (the engine behind Safari) and Firefox, allowing us to validate network requests across a broad range of environments.
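A cut-down version of the capture-and-check loop might look like the sketch below. The validation part is plain Python: it parses GA4 collection requests (`/g/collect`, where `en` carries the event name and `ep.`-prefixed keys carry custom event parameters) and checks that an expected event and its parameters are present. The capture part uses Playwright's sync API and assumes `pip install playwright` followed by `playwright install`; the helper names, URL, event and parameter names are hypothetical. GA4 can also batch several events into a POST body, which this sketch does not handle.

```python
from typing import Dict, Iterable, List
from urllib.parse import parse_qs, urlparse


def ga4_hits(urls: Iterable[str]) -> List[Dict[str, str]]:
    """Flatten the query string of each GA4 /g/collect request into a dict."""
    hits = []
    for url in urls:
        parsed = urlparse(url)
        if parsed.path.endswith("/g/collect"):
            hits.append({k: v[0] for k, v in parse_qs(parsed.query).items()})
    return hits


def find_event(hits: Iterable[Dict[str, str]], event: str, expected: Dict[str, str]) -> bool:
    """True if some hit has event name `event` and every expected key/value pair."""
    return any(
        hit.get("en") == event and all(hit.get(k) == v for k, v in expected.items())
        for hit in hits
    )


def capture_requests(page_url: str, browser_name: str = "chromium") -> List[str]:
    """Load a page in a Playwright browser ("chromium", "firefox" or "webkit") and return all request URLs."""
    from playwright.sync_api import sync_playwright  # imported here so the checks above run without Playwright

    urls: List[str] = []
    with sync_playwright() as p:
        browser = getattr(p, browser_name).launch()
        page = browser.new_page()
        page.on("request", lambda request: urls.append(request.url))
        page.goto(page_url, wait_until="networkidle")
        browser.close()
    return urls
```

A check for one page and browser could then read `find_event(ga4_hits(capture_requests("https://www.example.com/", "webkit")), "page_view", {"ep.page_type": "home"})`, looped over each browser name for cross-browser coverage.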

We used the Cursor AI code editor to optimise the development process and quickly set up the tool. Cursor’s proprietary LLM, optimised for coding, enabled us to design and create scripts efficiently, accelerating development by streamlining the debugging and iteration process. Cursor’s AI assistant (chat sidebar) boosted productivity by providing intelligent code suggestions, speeding up debugging and investigation. We’ll dive into our experience using Cursor a bit further in the next section.

Lastly, we chose Flask to build the web interface where users can select different types of automated testing. Flask is a lightweight web framework for Python, which we’ve had experience with on other projects. It has its pros and cons, but a key benefit for this project was that it allowed us to get started quickly and focus more on the nuts and bolts of the program.

Our Experience with Cursor:

Cursor AI played a crucial role in taking this project from ideation to MVP. By carefully prompting Cursor’s in-editor AI assistant, we were able to achieve the results we wanted. The tool allowed us to focus on the core structure of the program and the logic of each test without getting bogged down in documentation and finicky syntax errors.

Cursor also gave us the capability to include specific files, documentation links, and diagrams as context for prompts. This allowed us to provide relevant information for the model to find a solution. Compared to an earlier version of GitHub Copilot that we tested, we thought this was a clear benefit in leading the model to the most appropriate outcome.

Another useful benefit of Cursor AI was the automated code completion, which could identify bugs and propose fixes, as well as suggest code to add to the program. This feature was useful when it understood the outcome we were aiming for, which it did more often than not.

However, not everything was plain sailing, and our experience did reveal some drawbacks to using AI code editors to be mindful of. For example, relying too much on automated suggestions can distance you from the underlying code, making it harder to debug complex issues independently. It was important to review the suggested code and use Cursor’s helpful in-editor diffs to clearly outline the proposed changes. This also allowed us to accept or reject these changes, giving us a good level of control.

Another drawback we noticed is that AI-generated code may not always follow best practices or be optimised for performance, so it’s crucial to review and validate the output carefully. For example, Cursor tended to create monolithic scripts instead of separating functionality into components, such as tests and Flask-related parts, which would be easier to manage in the long term.

Another point we noticed was that over-reliance on AI tools could easily lead to complacency, potentially affecting our problem-solving skills and creativity as developers. When asking Cursor to make large changes to the codebase, it can be easy to just accept all changes and test whether they work without fully understanding the impact. When developing without AI assistance (as everyone did a couple of years ago), it’s good practice to make specific, relatively small changes at a time, to reduce the risk of introducing breaking changes and to better understand the impact of each one. The same approach seems just as sensible when working with a tool like Cursor.

What We Achieved – Efficiencies Unlocked:

The automated testing tool we developed significantly streamlined and optimised the data testing process in a number of key ways:

- Accelerated project development: Using Cursor AI, we rapidly moved through development and completed the project in a short period. The AI-driven interface, combined with Playwright’s capabilities, sped up our debugging process, which had been a major challenge in previous R&D projects. In the past, we often faced delays due to debugging blockers, but now, with the AI assistant, we could directly identify and fix issues, completing the project in a fraction of the time.

- Built a robust, reusable tool: The tool is scalable and flexible, and can be adapted for different analytics platforms (e.g., Google Analytics, Meta, Pinterest). It is reusable across different projects and client needs, as well as different browsers and environments.

- Time efficiency & boosted productivity: One of the most valuable outcomes was the significant reduction in manual testing time. With the new automated testing tool, we ran multiple test cases simultaneously, speeding up the overall process. This helped us meet tight project deadlines and improve client delivery without sacrificing quality. Additionally, it freed up time for focusing on challenging tasks and optimising existing solutions.

Conclusion:

With AI, the classic engineering view of ‘why spend 1 hour doing something when I can spend 10 hours automating it?’ has now become ‘why spend 1 hour doing something when I can spend 2-3 hours automating it?’. In this instance, Cursor allowed us to lower the barrier for innovation and create a tool to meet a set of tight deadlines, whilst also giving us a feature-filled, reusable program moving forwards.

For more information about how we can support your organisation with data testing – including our automated testing services – please feel free to contact us now or explore the links below.

The post Automated testing: Developing a data testing tool using Cursor AI appeared first on Lynchpin.

(Video) Applying RegEx filtering in Looker Studio to clean up and standardise GA4 reporting 15 Nov 2024 7:36 AM (last year)

In the latest episode of our ‘Calling Kevin’ video series, we show you how to clean up and filter URLs using a few simple expressions in Looker Studio.

By applying these regular expressions (RegEx), you can easily remove duplicates, fix casing issues and tidy up troublesome URL data to standardise GA4 reporting, just as you could in Universal Analytics.

Expressions used:

- To remove parameters from a page path: REGEXP_EXTRACT(Page, "^([\\w-/\\.]+)\\??")

- To remove a trailing slash from a page path: REGEXP_REPLACE(Page, "(/)$", "")

- To make a page path lowercase: LOWER(Page)

Combined: LOWER(REGEXP_REPLACE(REGEXP_EXTRACT(Page path + query string, "^([\\w-/\\.]+)\\??"), "(/)$", ""))
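You may want to sanity-check these expressions outside Looker Studio, for example against an exported list of page paths. The same three steps (extract, strip the trailing slash, lowercase) can be sketched in Python. This is a minimal illustration rather than part of the original walkthrough, and it rests on a few assumptions:

- The helper name `clean_page_path` is hypothetical.
- Python's `re` module stands in for Looker Studio's RE2 engine.
- The hyphen inside the character class is escaped, because Python rejects `\w-/` as a range.

As with the Looker Studio version, the root path `/` ends up as an empty string.

```python
import re

# Hypothetical helper mirroring the combined Looker Studio expression:
# LOWER(REGEXP_REPLACE(REGEXP_EXTRACT(path, ...), "(/)$", ""))
# The hyphen is escaped (\-) because Python's re module rejects "\w-/"
# as a character range, whereas RE2 accepts it.
PATH_PATTERN = re.compile(r"^([\w\-/.]+)\??")
TRAILING_SLASH = re.compile(r"(/)$")


def clean_page_path(page: str) -> str:
    """Drop query parameters, remove a trailing slash, then lowercase."""
    match = PATH_PATTERN.search(page)
    # REGEXP_EXTRACT returns null when nothing matches; use "" here instead.
    path = match.group(1) if match else ""
    path = TRAILING_SLASH.sub("", path)
    return path.lower()


if __name__ == "__main__":
    raw_paths = ["/Shoes/", "/shoes", "/shoes?ref=nav", "/Blog/Post.html?id=3"]
    # Variants of the same page collapse into a single cleaned path.
    cleaned = sorted({clean_page_path(p) for p in raw_paths})
    print(cleaned)  # ['/blog/post.html', '/shoes']
```

The three `/shoes` variants collapse into one row, which is the de-duplication effect the expressions are meant to have in a GA4 report.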

For more quick GA4 tips, be sure to check out other videos from our ‘Calling Kevin’ series.

The post (Video) Applying RegEx filtering in Looker Studio to clean up and standardise GA4 reporting appeared first on Lynchpin.

Webinar: Navigating Recent Trends in Privacy, Measurement & Marketing Effectiveness 3 Oct 2024 1:54 AM (last year)

How do you know what is and isn't working, and plan for success, as the tides of digital measurement continue to change?

The themes of privacy, measurement and marketing effectiveness triangulate around a natural trade-off and tension: balancing the anonymity of our behaviours and preferences against brands' ability to reach us relevantly and efficiently.

In this briefing our CEO, Andrew Hood, gives you a practical and independent view of current industry trends and how to successfully navigate them.

Want the full-length white paper?

Building on the themes introduced in the webinar, our white paper takes an in-depth look at privacy trends, advanced measurement strategies, and the balanced approach you can take to optimise marketing effectiveness.

Unlock deep-dive insight and practical tips you can begin implementing today to guide your focus over the coming months.


The post Webinar: Navigating Recent Trends in Privacy, Measurement & Marketing Effectiveness appeared first on Lynchpin.